Designing a Conversation Flow Builder at Smartbeings

SmartBeings Inc. is a Voice AI startup based in Silicon Valley. The company aims to bring the convenience of voice AI technologies to enterprises. I worked with the Smartbeings team as a UX Design Consultant for more than a year, from mid-2017 until late 2018.

One of the projects I was part of was designing a conversation flow builder: a platform that enables enterprises to build voice apps for their consumers. For example, Domino's might want its customers to order pizza by talking to an AI assistant.

Back in 2018, Smartbeings was a midsize startup (~25 employees). As the only designer on the team, I teamed up with Sharon Rasheed (Product Manager), Shreyas (Lead Developer) and Rajesh Shanmugam (CTO) to design the foundation of the Enterprise platform.

Since this was a large project, I will focus on the conversation flow builder in this post. I will briefly discuss the structure of a conversation and its building blocks, then move on to the information architecture, the user story and, finally, the user interface.

The structure of a conversation

Consider a conversation between a human (say Sam) and a machine (let's call her eM). eM is sitting idle, doing her machine things.

Just as with a real human, Sam has to call eM in some way to get her attention. This is done using a wake word. Wake words are prevalent in consumer products (e.g. "Okay Google", "Hey Siri").

eM is now listening. Next, Sam tells eM what she wants her to do. This can be anything, like setting an alarm, booking a cab or ordering pizza.

First, eM converts Sam's voice command to text. Then, to understand what exactly Sam wants, eM looks for the intent in the request. For example, if Sam says "Set a reminder", the intent is to add a reminder.

Now eM knows about Sam's intent to add a reminder. But a few things about this intent are still unclear, like what to remind (reminder name) and when to remind (reminder time). These are called Intent Parameters. Intent Parameters can be mandatory or optional (with defaults).

If eM is able to identify the intent but does not have mandatory parameters, eM asks Sam about those missing parameters. Once all the required parameters are in, eM processes the request.
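
The check for missing mandatory parameters can be sketched in a few lines of Python. This is only an illustration of the idea, not the code Smartbeings shipped: the `IntentParameter` class, the `missing_parameters` and `resolve` helpers, and the reminder parameter names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class IntentParameter:
    name: str
    required: bool = True
    default: Optional[Any] = None  # only used when the parameter is optional


def missing_parameters(params: List[IntentParameter], collected: Dict[str, Any]) -> List[str]:
    """Return the names of mandatory parameters the user has not supplied yet."""
    return [p.name for p in params if p.required and p.name not in collected]


def resolve(params: List[IntentParameter], collected: Dict[str, Any]) -> Dict[str, Any]:
    """Fill any optional parameter the user skipped with its default value."""
    resolved = dict(collected)
    for p in params:
        if not p.required:
            resolved.setdefault(p.name, p.default)
    return resolved


# Hypothetical "add reminder" intent: two mandatory parameters, one optional.
ADD_REMINDER = [
    IntentParameter("reminder_name"),
    IntentParameter("reminder_time"),
    IntentParameter("repeat", required=False, default="never"),
]

collected = {"reminder_name": "call mom"}
print(missing_parameters(ADD_REMINDER, collected))  # ['reminder_time']
```

As long as `missing_parameters` returns a non-empty list, the assistant keeps asking; once it is empty, `resolve` fills in the defaults and the request can be processed.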

To make sense of each intent, eM needs to look up in her knowledge base what each intent parameter can mean. This knowledge base is made up of Entities, e.g. a 24-hour clock for times, or days and months for mapping dates.

Once eM is ready with a response, she conveys it to Sam. She can say it aloud, display it on a screen or convey it through any other communication medium she is capable of.

Information Architecture

From above chart:

Domain: Smartbeings serves multiple enterprise customers at once. Each enterprise customer is assigned one domain. Each domain has its own assistant name, enabling unique wake words.

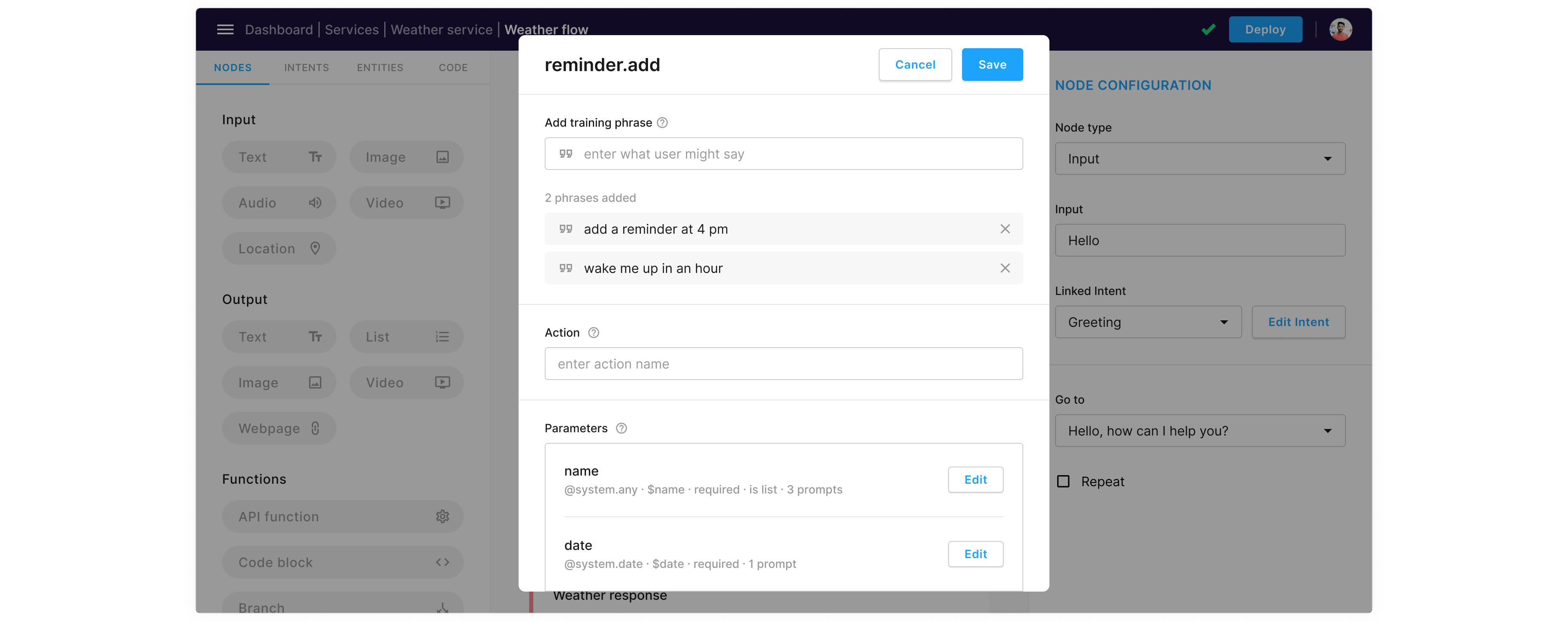

Intent: An intent represents the purpose of users' voice input. Companies need to add training phrases and intent parameters suitable for their use cases.

Training Phrase (Utterance): The same intent can be conveyed in multiple ways, e.g. "Book a cab to the airport" or "Find me a ride to the airport". Training phrases, or utterances, are linked to an intent and capture the different ways a user can phrase her request.

Intent Parameter: Intent parameters are values extracted from users' voice input to make sense of the request. e.g. Cab, ride, airport

Entity: Each intent parameter is mapped to an entity. The entity is the type of information to be extracted from the user's input. e.g. the intent parameter airport is mapped to an entity @airport that stores a list of all airports across the world.

Service: A service is any task a company (an enterprise customer) fulfils using the assistant, e.g. booking a cab, ordering food or changing the light settings in a room.

Conversation Flow: A service is a group of one or more conversation flows. A conversation flow is a visual representation of the order in which the AI assistant takes in user input, processes the request and responds.
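
One way to picture how these terms nest is as a small data model. The Python sketch below is my own reading of the definitions above, not the platform's actual schema: the class and field names (including `trigger_intent`) and the sample values are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Entity:
    name: str            # e.g. "@airport"
    values: List[str]    # known values the entity can match


@dataclass
class IntentParameter:
    name: str
    entity: Entity       # each intent parameter is mapped to an entity


@dataclass
class Intent:
    name: str
    training_phrases: List[str] = field(default_factory=list)
    parameters: List[IntentParameter] = field(default_factory=list)


@dataclass
class ConversationFlow:
    name: str
    trigger_intent: Intent  # assumption: a flow starts from one intent


@dataclass
class Service:
    name: str
    flows: List[ConversationFlow] = field(default_factory=list)


@dataclass
class Domain:
    customer: str
    assistant_name: str  # each domain has its own assistant name
    services: List[Service] = field(default_factory=list)


airport = Entity("@airport", ["SFO", "JFK", "LHR"])
book_cab = Intent(
    "book_cab",
    training_phrases=["Book a cab to the airport", "Find me a ride to the airport"],
    parameters=[IntentParameter("destination", airport)],
)
domain = Domain(
    "Example Cabs",
    "eM",
    [Service("Cab booking", [ConversationFlow("Book ride", book_cab)])],
)
print(domain.services[0].flows[0].trigger_intent.parameters[0].entity.name)  # @airport
```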

Conversation Flow Builder

Building a conversation flow is like writing a script for the dialogue that may happen between a user and the assistant in a particular scenario. For a given dialogue, a conversation designer cannot predict one definite path.

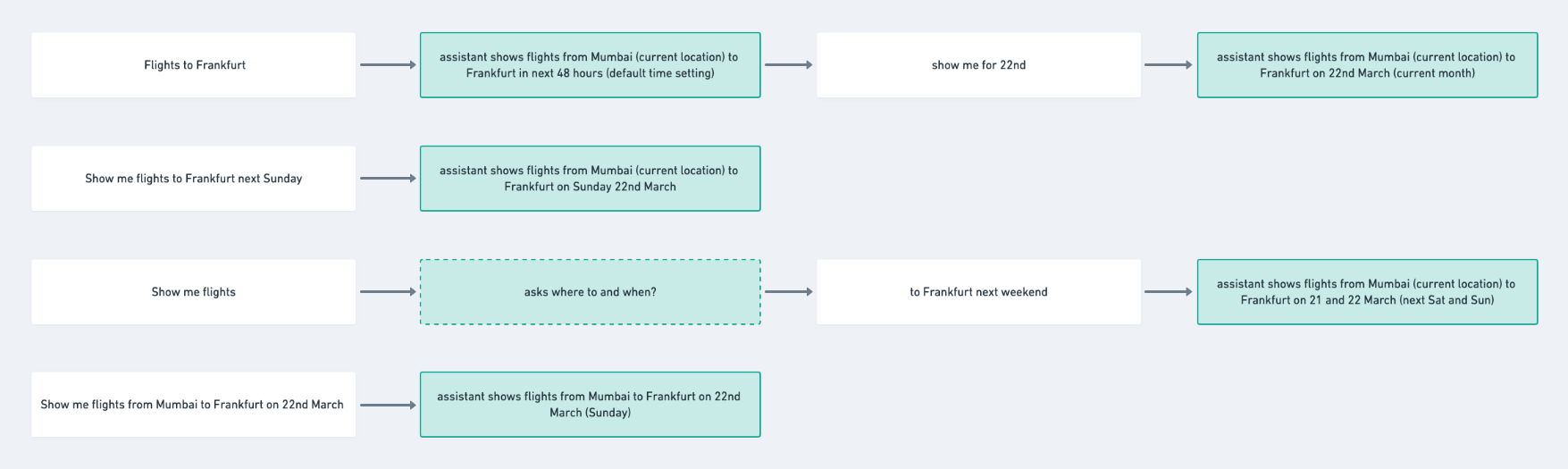

The diagram below shows multiple paths in which a single end goal can be achieved - searching for flights.

However, the conversation designer can create checkpoints in the dialogue. Each checkpoint will help the system to map progress in the conversation and guide the user to the end goal.

Nodes

Nodes are the checkpoints in the conversation flow. Nodes can be categorised into three types:

- Input Node: Information taken in by the assistant is defined in the input node. In most cases, it is the user's voice command, but it can also include other triggers like location, a specific sound, etc.

- Output Node: Information conveyed by the assistant is defined in the output node. Output can be textual, auditory or visual based on assistant capacity and use case.

- Function Node: Any activity to be performed other than taking in and conveying information is defined in the function node. e.g. calling an API, running a custom code block, branching, etc.
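
To make the three node types concrete, here is a minimal Python sketch of a flow as linked checkpoints. It is an illustration under my own assumptions: `NodeType`, `Node`, `walk`, the `config` keys and the sample flight-search flow are hypothetical and do not reflect the builder's internal format.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class NodeType(Enum):
    INPUT = "input"        # what the assistant takes in
    OUTPUT = "output"      # what the assistant conveys
    FUNCTION = "function"  # API calls, code blocks, branching


@dataclass
class Node:
    id: str
    type: NodeType
    config: Dict[str, str] = field(default_factory=dict)
    next_id: Optional[str] = None  # the next checkpoint, if any


def walk(nodes: Dict[str, Node], start_id: str) -> List[str]:
    """Follow next_id links from the start node and return the visited ids in order."""
    path: List[str] = []
    seen = set()
    current: Optional[str] = start_id
    # The `seen` set stops the walk if the links ever loop back on themselves.
    while current is not None and current not in seen:
        seen.add(current)
        path.append(current)
        current = nodes[current].next_id
    return path


flow = {
    "ask": Node("ask", NodeType.INPUT, {"intent": "search_flights"}, next_id="lookup"),
    "lookup": Node("lookup", NodeType.FUNCTION, {"api": "flight-search"}, next_id="reply"),
    "reply": Node("reply", NodeType.OUTPUT, {"text": "Here are your flights."}),
}
print(walk(flow, "ask"))  # ['ask', 'lookup', 'reply']
```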

User Story

As a conversation designer, I want to:

- Define how a conversation starts - by voice command (in most cases), but also location, external sounds, etc.

- Connect that input to an intent

- Add different variations of the same command (in the case of a voice command)

- Link the input to the next checkpoint in the conversation (in some cases, the assistant can directly respond, or the input command may need some additional processing before a response)

- Diverge the conversation into more than one path to accommodate conditional responses

- Make API calls at any step in the flow

- Add a custom code block at any step in the flow

- Define what and how the assistant responds

- Test the conversation flow in parallel

The Interface

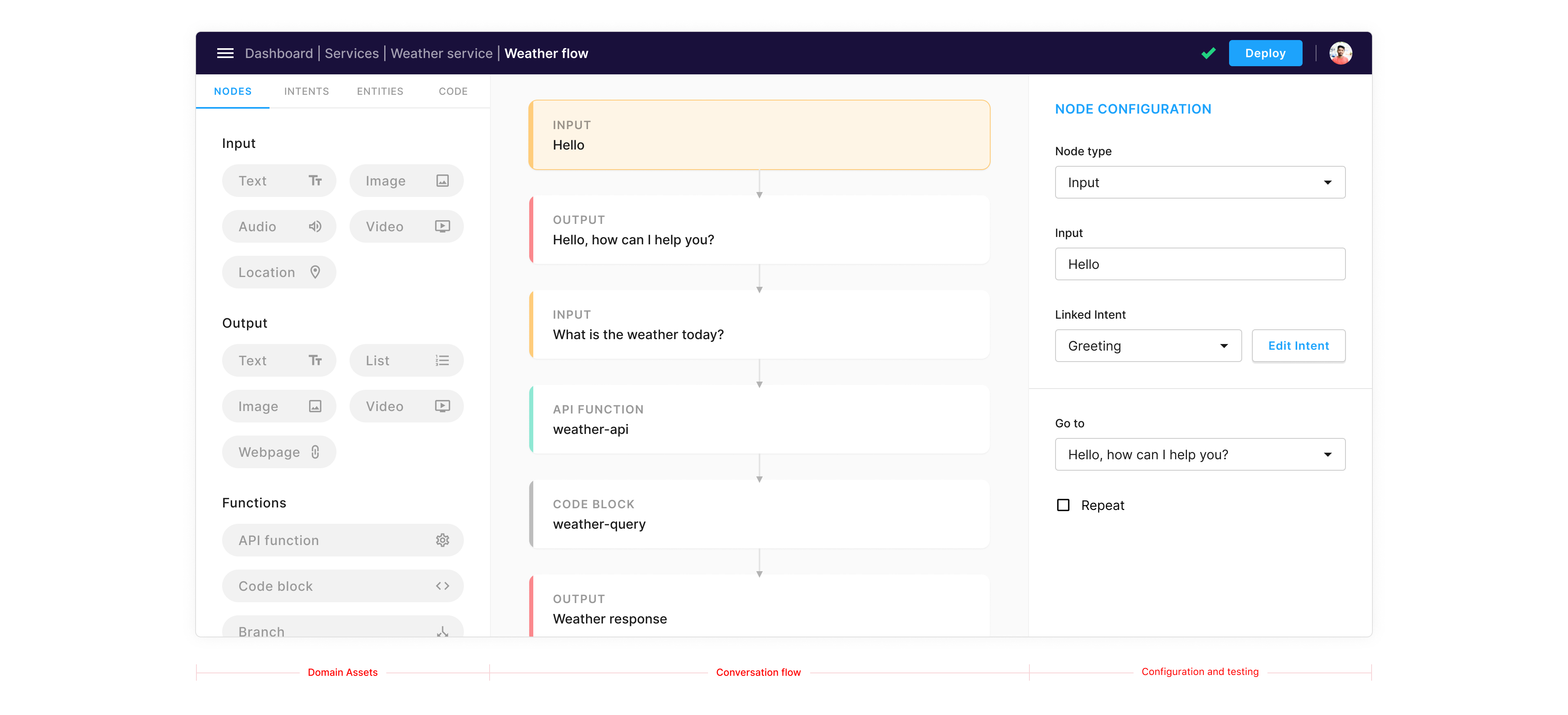

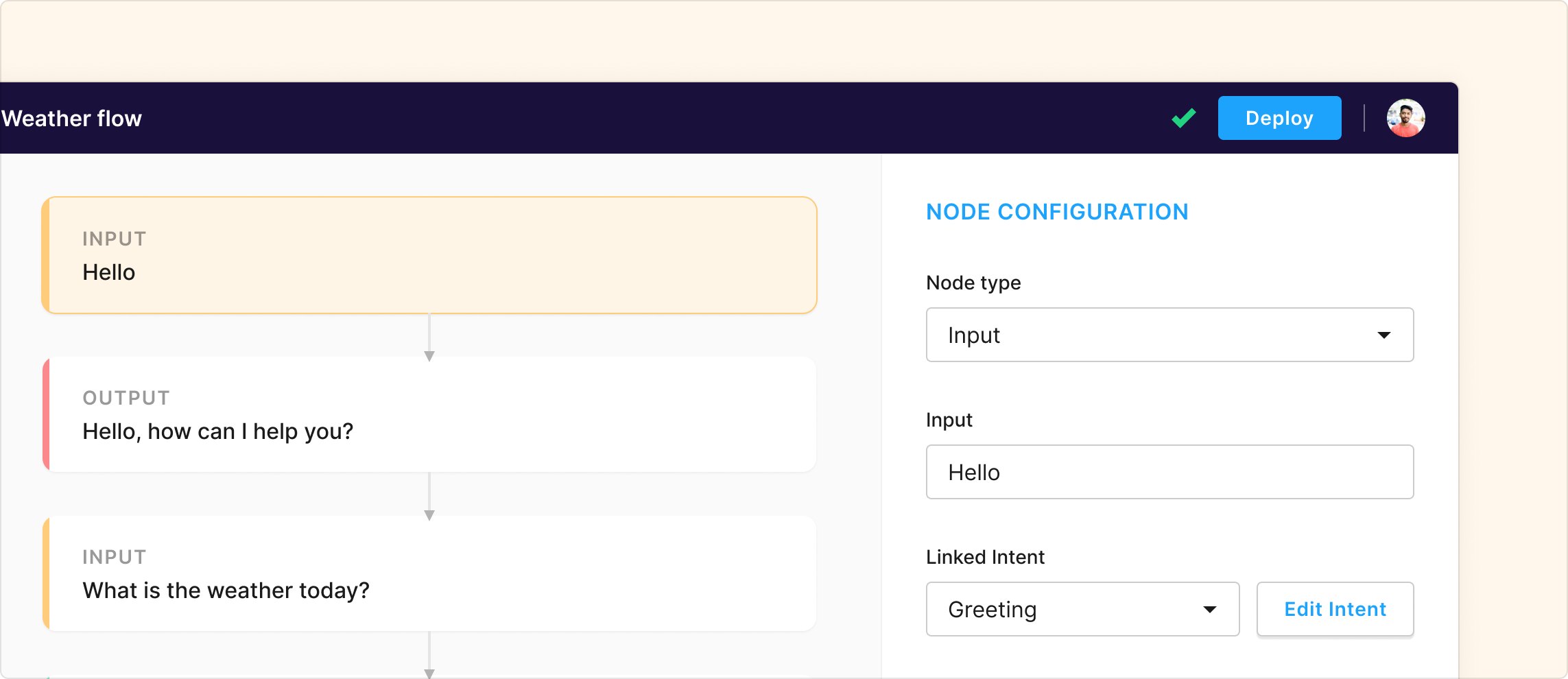

The primary principle while designing the flow builder interface was to enable conversation designers to explore, design and test the flow in a single place, with minimal loss of context at any step.

The interface has a three-column grid:

- Domain assets - nodes, intents, entities and code

- The Flow chart

- Node Configuration and Testing

1. Domain Assets

Domain assets include a repository of intents, entities and code blocks relevant to a particular enterprise customer. Having them one click away comes in handy while designing the flow.

The modal layout enables the conversation designer to tweak intents, entities and code blocks without losing the context of the flow.

Also, for friction-free onboarding, the interface has clear instructions and help content at every step.

These domain assets also have a separate page of their own, in case someone from the enterprise team does not have permission to build flows but wants to update a particular intent, entity or code block.

2. The Flow Chart

The conversation flow chart extends vertically, with the flow trigger at the top. The conversation designer can attach subsequent nodes to the flow using drag and drop.

Branching

It's rare for a conversation flow to follow a single path. In many cases, more than one path has to be created to factor in multiple conditions, e.g. separate paths for "Book ride now" and "Schedule a ride" in the same booking flow.

Condition-based branching enables the conversation designer to diverge the flow into more than one path.

The conversation designer can simply drag and drop a node onto the same level to create a branch. She can then add the respective conditions in the branch node configuration panel on the right.
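
Condition-based branching can be pictured as an ordered list of conditions, each pointing at the node its path continues with. The Python sketch below is a simplified illustration, not how the builder evaluated branches internally: `Branch`, `choose_branch`, the `pickup_time` context key and the target node ids are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional


@dataclass
class Branch:
    label: str
    condition: Callable[[Dict[str, Any]], bool]  # evaluated against the conversation context
    target: str                                   # id of the node this branch leads to


def choose_branch(branches: List[Branch], context: Dict[str, Any]) -> Optional[str]:
    """Return the target of the first branch whose condition holds, or None if none match."""
    for branch in branches:
        if branch.condition(context):
            return branch.target
    return None


# Two paths in the same booking flow, split on whether a pickup time was given.
booking_branches = [
    Branch("Book ride now", lambda ctx: ctx.get("pickup_time") is None, "dispatch_now"),
    Branch("Schedule a ride", lambda ctx: ctx.get("pickup_time") is not None, "schedule_ride"),
]

print(choose_branch(booking_branches, {"destination": "airport"}))  # dispatch_now
print(choose_branch(booking_branches, {"destination": "airport", "pickup_time": "18:30"}))  # schedule_ride
```

Evaluating branches in order and taking the first match keeps the behaviour predictable when two conditions overlap; a real system might instead require the conditions to be mutually exclusive.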

3. Node Configuration and Testing

After dropping a node into the flow chart, the user can configure its properties in the column on the right.

Some properties are common to every node (e.g. go-to, add branch), while others are specific to the node type (e.g. link intent for an input node). Also, each node type is colour-coded for visual reference.

Response

The assistant's response is defined in the output node. The type of response varies from case to case and also depends on the assistant's hardware capabilities, e.g. an assistant device may have voice output but no display, or a display with just text rendering and no image rendering.

The conversation designer can define output in the form of voice, text, a list, an image, a video or a web page. Depending on the device's hardware capabilities, the assistant can automatically choose one or more suitable formats to convey the information.
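
The format selection can be thought of as filtering the designer's output formats by what the device can render. The Python sketch below shows the idea only. The capability names and the `REQUIRED_CAPABILITY` mapping are assumptions of mine, not the platform's actual device model.

```python
from typing import Dict, List, Set

# Hypothetical mapping from each output format to the device capability it needs.
REQUIRED_CAPABILITY: Dict[str, str] = {
    "voice": "speaker",
    "text": "text_display",
    "list": "text_display",
    "image": "image_display",
    "video": "video_display",
    "web_page": "browser",
}


def supported_formats(defined: List[str], capabilities: Set[str]) -> List[str]:
    """Keep the designer-defined formats that the device is able to render."""
    return [f for f in defined if REQUIRED_CAPABILITY.get(f) in capabilities]


defined = ["voice", "text", "image"]
print(supported_formats(defined, {"speaker"}))                  # ['voice']
print(supported_formats(defined, {"speaker", "text_display"}))  # ['voice', 'text']
```

A voice-only device gets just the spoken response, while a device with a text display also gets the text version of the same output node.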

Flow Testing

It's a difficult task to perfect a conversation flow in one go. The conversation designer has to carry out back and forth testing to ensure a smooth end experience.

In parallel to adding and configuring nodes, the conversation designer can run tests in the same UI. If no node is active/selected, the right sidebar switches to test mode allowing her to test the flow.

Handling Errors

Clear visibility of warnings and errors is imperative for a conversation designer to rectify any mistakes while designing the flow. The error messaging is categorised into 2 types:

- Node error: Error pertaining to a particular node configuration is a node error. These errors are displayed on the respective node card in the flow.

- Flow error: Error pertaining to the flow as a whole is a flow error. This error status stays on the header adjacent to the Deploy button. If everything is fine, it just shows a green check icon.
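
The split between the two error types can be sketched as a validation pass that reports them separately. This Python example is illustrative only: the `FlowNode` and `ValidationReport` classes and the three validation rules are hypothetical, chosen to show one node-level and one flow-level check rather than the builder's real rule set.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class FlowNode:
    id: str
    type: str                     # "input", "output" or "function"
    intent: Optional[str] = None  # only meaningful for input nodes
    next_id: Optional[str] = None


@dataclass
class ValidationReport:
    node_errors: Dict[str, List[str]] = field(default_factory=dict)  # shown on node cards
    flow_errors: List[str] = field(default_factory=list)             # shown in the header

    @property
    def ok(self) -> bool:
        """True when there is nothing to show, i.e. the green check case."""
        return not self.node_errors and not self.flow_errors


def validate(nodes: List[FlowNode]) -> ValidationReport:
    report = ValidationReport()
    ids = {n.id for n in nodes}
    for n in nodes:
        # Node errors: problems with one node's own configuration.
        if n.type == "input" and not n.intent:
            report.node_errors.setdefault(n.id, []).append("No intent linked")
        if n.next_id is not None and n.next_id not in ids:
            report.node_errors.setdefault(n.id, []).append("Links to a missing node")
    # Flow error: a problem with the flow as a whole.
    if not any(n.type == "output" for n in nodes):
        report.flow_errors.append("Flow never responds to the user")
    return report


report = validate([FlowNode("start", "input", next_id="reply")])
print(report.node_errors)  # {'start': ['No intent linked', 'Links to a missing node']}
print(report.flow_errors)  # ['Flow never responds to the user']
print(report.ok)           # False
```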

Testing and Development

The company had a separate team to gather feedback from its enterprise customers and communicate upcoming features to them.

The development team, meanwhile, was in the loop from day zero, helping me iron out technical flaws and navigate technical limitations as we made progress.

• • • •

Special thanks to Sharon Rasheed, Shreyas and other members of the development team who contributed to this project. It was a great learning environment for everyone involved.